As you flip through the pages of this chapter, get ready to delve into the heart of these game-changing AI marvels. We'll embark on a journey to understand what LLMs are, how they work, and the vast potential they hold for transforming various aspects of our lives. But before we dive in, let's set the stage with a simple question: what exactly are LLMs? Imagine a language model trained on a massive dataset of text and code, capable of generating human-quality text, translating languages, writing different kinds of creative content, and even answering your questions in an informative way. That's the essence of an LLM: a model that can process and generate language at a scale not seen before.

This chapter will be your comprehensive guide to navigating the fascinating world of LLMs. We'll delve into their core concepts, exploring different types like autoregressive models and encoder-decoder models. You'll discover the magic behind self-attention, a mechanism that allows LLMs to focus on relevant information, and delve into the pre-training strategies that give them their vast knowledge. Finally, we'll showcase the real-world applications of LLMs, from powering chatbots and generating realistic dialogue to creating marketing copy and summarizing complex topics.

By the end of this chapter, you'll have a comprehensive understanding of LLMs, their inner workings, and the remarkable ways they are shaping the future of language and communication. So, prepare to be amazed by the power of these linguistic powerhouses!

In this chapter, we will cover the following topics:

Definition and overview of LLMs

Types of LLMs

Key technical concepts

Evaluating LLMs

Applications, challenges, and limitations

LLM Cheat Sheet

Definition and overview of LLMs

Imagine machines that converse like seasoned storytellers, translate languages with seamless fluency, and even craft poems that tug at your heartstrings. These aren't characters from science fiction, but a reality ushered in by a new breed of artificial intelligence: Large Language Models (LLMs).

LLMs are more than just advanced chatbots; they're sophisticated AI models trained on colossal datasets of text and code. Think of them as language titans, trained in libraries filled with novels, news articles, and even code repositories. This vast exposure allows them to grasp the nuances of human language, understand context, and even generate their own creative text formats.

But how do these titans function? At their core lies a complex neural network architecture called a transformer. Imagine this as a network of interconnected nodes, each processing and analyzing bits of information. Like a team of detectives piecing together clues, the network deciphers the relationships between words, learns their meanings, and ultimately predicts the next word in a sequence. This allows LLMs to understand the flow of language, generate responses that make sense, and even perform impressive feats like translating languages or summarizing text.

LLMs are no longer confined to research labs. They're actively shaping various industries, with applications ranging from generating personalized learning experiences in education to powering chatbots that offer natural language interactions in customer service. Imagine chatbots that understand your frustration and respond with genuine empathy, or AI assistants that summarize research papers in clear and concise language. These are just glimpses of the potential LLMs hold.

However, like any powerful tool, LLMs come with their own set of challenges. We need to be mindful of potential biases, the spread of misinformation, and the ethical considerations surrounding this technology. Responsible development and deployment are crucial to ensure LLMs are a force for good, amplifying human creativity and understanding while navigating the ethical landscape with care.

As we delve deeper into the world of LLMs, remember this: they're not merely machines mimicking human language; they represent a fascinating intersection of artificial intelligence and human creativity, with the potential to reshape the way we interact with information and express ourselves. This journey has just begun, and the future holds exciting possibilities for the language titans we call LLMs.

The chart below shows a timeline of some of the most representative LLM frameworks (so far). In addition to large language models that meet the survey's parameter-count threshold, it includes a few representative works that pushed the limits of language models and paved the way for their success (e.g., the vanilla Transformer, BERT, GPT-1), as well as some small language models. ♣ marks entries that serve not only as models but also as approaches; ♦ marks entries that are approaches only. [LLM Survey arxiv.2402.06196]

Notable LLMs

The journey of LLMs hasn't been a straight shot. The story starts with simpler statistical models, followed by the groundbreaking Transformer architecture in 2017. This was a turning point, providing the foundation on which more powerful LLMs were built. Then came 2018 with the first Generative Pre-trained Transformer (GPT) and with BERT, which set new records in NLP tasks and showcased the power of pre-training, where models are exposed to vast amounts of text before tackling specific tasks. Finally, 2020 witnessed the arrival of GPT-3, a massive autoregressive LLM capable of generating remarkably human-like text, capturing the public's imagination and highlighting the potential and challenges of this technology.

The landscape of LLMs has rapidly evolved since 2020. Access to these models ranges from web interfaces and APIs to fully accessible datasets, codebases, and model checkpoints, and the terms for commercial usage differ as well. In this section, we highlight notable LLMs in chronological order, showcasing their unique features and contributions. The tag after each name, [API] or [checkpoint], indicates how the model is made available.

GPT-3 [API] was released by OpenAI in June 2020. The model contains 175 billion parameters and is considered one of the most important LLM milestones. It was the first model to demonstrate strong few-shot learning capabilities.

ChatGPT/GPT-3.5 [API] was released by OpenAI in November 2022. It extended GPT-3 in terms of both the data and underlying algorithms. For example, it used RLHF to boost model performance. ChatGPT was specifically designed for conversational use, offering a more natural and engaging chatbot experience.

GPT-4 [API] was released in March 2023. In addition to handling text, it can also take images as input. GPT-4 outperformed ChatGPT on many tasks, including passing the Bar exam. It also demonstrated better performance at safety tests (OpenAI, 2023).

LLaMA [checkpoint] was released by Meta in February 2023. It is a group of models with different numbers of parameters (7B, 13B, 33B, and 65B). Unlike the GPT models above, which are accessible only through APIs, LLaMA provides implementation details of the model architecture and checkpoints. It has become a fundamental resource for subsequent open-source research.

Alpaca [checkpoint] was released by Stanford in March 2023. Alpaca was fine-tuned on top of LLaMA-7B using 52K instruction-following demonstrations generated with OpenAI's text-davinci-003 (a GPT-3.5 model). Remarkably, in Stanford's preliminary evaluation Alpaca behaved similarly to that much larger model while being small and cost-effective to reproduce (less than $600).

Claude [API] was released by Anthropic, an Alphabet-backed company, in March 2023. By adopting the Constitutional AI method proposed by Bai et al. (2022), Claude is reported to generate less toxic, biased, and hallucinatory responses. In May 2023, Anthropic expanded Claude’s context window from 9K to 100K tokens. This is equivalent to around 75,000 words and allows the model to parse all the information from an entire book at once.

Falcon [checkpoint] was released by the Technology Innovation Institute (TII) in May 2023. It is an open-source LLM with 40 billion parameters under the Apache 2.0 license. Falcon stands out due to the high quality of its training data, which includes 1 trillion tokens from the RefinedWeb dataset enhanced with curated corpora. At the time of writing, Falcon holds the top position on the Hugging Face Open LLM Leaderboard.

Gemini Ultra was released by Google in 2024 and powers the service called Gemini Advanced. Google presents it as a new experience far more capable at reasoning, following instructions, coding, and creative collaboration. For example, it can be a personal tutor, tailored to your learning style. Or it can be a creative partner, helping you plan a content strategy or build a business plan.

NLP and its Connection to LLMs

Before we fully appreciate the magic of LLMs, we need to understand the language game they're playing: Natural Language Processing (NLP). Imagine NLP as the bridge between computers and the messy, wonderful world of human language. It's the art of teaching machines to understand, interpret, and even generate human language – a complex task filled with nuances and challenges.

At the heart of NLP lie various tasks, each posing its own unique hurdles. Let's explore some of the core areas:

Text classification: Can a machine categorize a document as news, a poem, or an email? LLMs tackle this by analyzing the text style and content.

Machine translation: Transforming words from one language to another accurately and preserving meaning is no easy feat. LLMs bridge this gap, understanding the context and intent to deliver fluent translations.

Question answering: Imagine asking a machine a question and getting a clear, informative answer. LLMs dive into vast amounts of information, seeking the answer you need amidst the sea of text.

Text summarization: Condensing a lengthy article into a concise summary without losing key points? LLMs are adept at identifying the main ideas and presenting them in a clear, digestible format.

Text generation: From crafting poems to writing scripts, LLMs can even generate creative text formats, pushing the boundaries of machine-made language.

These are just a few examples, and the list continues to grow. But what makes LLMs particularly adept at tackling these NLP challenges? Their secret lies in their training. Like master linguists, they're exposed to massive amounts of text and code, learning the intricacies of language through this exposure. This allows them to identify patterns, understand context, and ultimately perform these NLP tasks with impressive accuracy and creativity.

Think of it this way: imagine training a human translator by immersing them in different cultures and languages for years. That's essentially what happens with LLMs, except on a vastly accelerated scale. This training empowers them to tackle the complexities of NLP, pushing the boundaries of what machines can achieve with language.

In the following section, we'll delve deeper into how LLMs are used for specific NLP applications, showcasing their capabilities and exploring the exciting possibilities they hold for the future. Remember, the connection between NLP and LLMs is vital – understanding the tasks and challenges helps us appreciate the true power and potential of these language titans.

Exploring Different Types of LLMs

Now that we've peeked into the world of NLP and how LLMs use it, let's meet the different types of these language magicians! We explore three main categories: autoregressive models, autoencoding models, and encoder-decoder models.

Autoregressive Models

Autoregressive Language Models (e.g., GPT): Autoregressive models primarily use the decoder part of the Transformer architecture, making them well-suited for natural language generation (NLG) tasks such as open-ended text generation and summarization. These models generate text by predicting the next word in a sequence given the previous words. They are trained to maximize the likelihood of each word in the training dataset, given its context. The most well-known example of an autoregressive language model is OpenAI’s GPT (Generative Pre-trained Transformer) series, with GPT-4 being the most powerful iteration at the time of writing. Decoder-only autoregressive models are built from stacked layers of masked (causal) self-attention and feed-forward networks; cross-attention appears only when a decoder is paired with an encoder, as in the encoder-decoder models discussed later in this chapter.

These models work by analyzing the words they have already produced and predicting which word is likely to come next, based on their training data. Think of it as guessing the next word in a game of Mad Libs, but with way more complex calculations! Their training involves massive amounts of text, like books, articles, and even code. This exposure helps them understand language patterns and predict words that make sense, leading to surprisingly human-like text generation.

Specifically, given a text sequence

$$x = (x_1, \dots, x_T)$$

AR language modeling factorizes the likelihood into a forward product

$$p(x) = \prod_{t=1}^{T} p(x_t \mid x_{<t})$$

or a backward one

$$p(x) = \prod_{t=T}^{1} p(x_t \mid x_{>t})$$
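To make the forward factorization concrete, here is a minimal sketch that multiplies per-token conditional probabilities (by summing their logarithms). The probability table is a hypothetical toy, and it conditions only on the previous token rather than on the full prefix; a real LLM would compute each conditional with a neural network over all preceding tokens.

```python
import math

# Hypothetical toy table: P(next token | previous token). "<s>" marks the start.
BIGRAM_PROBS = {
    "<s>": {"the": 0.6, "a": 0.4},
    "the": {"cat": 0.5, "dog": 0.5},
    "a": {"cat": 0.5, "dog": 0.5},
    "cat": {"sleeps": 0.7, "runs": 0.3},
    "dog": {"sleeps": 0.2, "runs": 0.8},
}

def sequence_log_likelihood(tokens, probs=BIGRAM_PROBS, start="<s>"):
    """Return log p(x) as the sum over t of log p(x_t | context)."""
    log_p = 0.0
    previous = start
    for token in tokens:
        # Simplifying assumption: the context is just the previous token.
        log_p += math.log(probs[previous][token])
        previous = token
    return log_p

log_p = sequence_log_likelihood(["the", "cat", "sleeps"])
# The product of the three conditionals is 0.6 * 0.5 * 0.7.
print(math.exp(log_p))
```

Working in log space is a common design choice here, since multiplying many small probabilities directly would quickly underflow for long sequences.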

Strengths and Limitations

They excel at storytelling, poem writing, and generating different creative text formats. Imagine them as AI bards, composing lyrics or scripts with impressive fluency. They can adjust their "voice" and style based on the context and prompts they receive. Think of them as chameleons of language, adapting to different genres and tones.

AR language models are adept at generative NLP tasks because they utilize causal attention to predict the next token, making them naturally suited for content generation. Another advantage is that training data is relatively straightforward to obtain: the training objective is simply to predict the next token in a given corpus, so no manual labels are needed.

The main limitation is that an AR model conditions each prediction on context from only one direction, either forward or backward. It cannot use the left and the right context of a token at the same time, which puts it at a disadvantage on understanding tasks that benefit from bidirectional context.
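The causal attention mentioned above can be pictured as a mask over token positions. The sketch below is an illustration only, with hypothetical helper names: it builds the lower-triangular pattern used by autoregressive models and, for contrast, the full pattern a bidirectional model would use.

```python
def causal_mask(length):
    """mask[i][j] is True when position i may attend to position j (j <= i)."""
    return [[j <= i for j in range(length)] for i in range(length)]

def bidirectional_mask(length):
    """Every position may attend to every other position."""
    return [[True] * length for _ in range(length)]

# Print the causal pattern: "x" = visible, "." = hidden (a future token).
for row in causal_mask(4):
    print("".join("x" if allowed else "." for allowed in row))
```

Each row shows what one token can see: the first token sees only itself, while the last token sees the whole prefix.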

Popular autoregressive models

GPT, GPT-2, GPT-3, and CTRL: These models gained fame for their remarkable text generation capabilities.

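Jukebox: Imagine creating music from a description of its genre, artist style, and lyrics! OpenAI's Jukebox applies autoregression to raw audio to compose music, including rudimentary singing.

Megatron-Turing NLG: This 530-billion-parameter model from Microsoft and NVIDIA was built for large-scale natural language generation across tasks such as text completion and question answering.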

Autoencoding Language Models

Autoencoding Language Models (e.g., BERT): Autoencoding models, on the other hand, mainly use the encoder part of the Transformer. They are designed for understanding tasks like classification and question answering. These models learn vector representations (also called embeddings) of the input text by reconstructing the original input from a masked or corrupted version of it. They are trained to predict missing or masked words in the input text by leveraging the surrounding context. BERT (Bidirectional Encoder Representations from Transformers), developed by Google, is one of the most famous autoencoding language models. It can be fine-tuned for a variety of NLP tasks, such as sentiment analysis, named entity recognition, and question answering. Autoencoding models based on encoder networks primarily leverage layers related to self-attention mechanisms and feed-forward networks as part of their neural network architecture.

Conceptually, autoencoding language models (AELMs) take a sentence as input and encode it into a latent representation. Think of it as capturing the essence of the sentence in a condensed code that records the key information and the relationships between words. The model is then trained to reconstruct the original text from this representation.

A notable example is BERT, which was for a time the state-of-the-art pretraining approach. Given the input token sequence, a certain portion of tokens is replaced by a special symbol [MASK], and the model is trained to recover the original tokens from the corrupted version. In short, the AE language model aims to reconstruct the original data from corrupted input.
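The [MASK] corruption step described above can be sketched in a few lines. This is a simplified illustration rather than BERT's exact procedure: the function name is hypothetical, whole words stand in for subword tokens, and the default rate of 0.15 is an assumption based on the rate reported for BERT.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, mask_prob=0.15, seed=0):
    """Replace a random subset of tokens with [MASK].

    Returns the corrupted sequence and a dict {position: original token}
    holding the targets the model would be trained to recover.
    """
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    corrupted, targets = [], {}
    for position, token in enumerate(tokens):
        if rng.random() < mask_prob:
            corrupted.append(MASK)
            targets[position] = token
        else:
            corrupted.append(token)
    return corrupted, targets

tokens = "the cat sat on the mat".split()
corrupted, targets = mask_tokens(tokens, mask_prob=0.3, seed=1)
print(corrupted)  # the input the model sees
print(targets)    # the tokens it must predict, keyed by position
```

During training, the loss would be computed only at the positions stored in `targets`; the unmasked tokens serve purely as context.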

Strengths

1. Unsupervised Learning: AE models excel in unsupervised learning scenarios, where they autonomously learn the intricate patterns and structures of language from vast amounts of unannotated text data. This capability renders them remarkably versatile, capable of adaptation to a myriad of tasks without the constraints of task-specific labeled datasets.

2. Contextual Understanding: By reconstructing input sequences from corrupted or masked tokens, AE models cultivate a deep understanding of contextual relationships within language. This contextual awareness enables them to capture nuanced meanings and dependencies between words or tokens, facilitating more accurate and contextually relevant outputs.

3. Pretraining-Fine Tuning: AE models operate on a pretraining-finetuning paradigm, wherein they undergo initial training on large corpora of text data followed by fine-tuning on task-specific datasets. This approach facilitates transfer learning, where knowledge gleaned during pretraining is seamlessly transferred to downstream tasks, resulting in enhanced performance, particularly in scenarios with limited task-specific data.

4. Flexible Architecture: AE models offer flexibility in architecture design, ranging from the original BERT to parameter-efficient variants such as ALBERT. This adaptability empowers researchers to experiment with diverse architectures, tailoring them to specific use cases or computational resources.

Limitations

1. Masking-based Pretraining: AE models rely on token masking during pre-training, which can introduce a discrepancy between the pre-training and fine-tuning phases. Because the artificial [MASK] symbol never appears in the data used for fine-tuning, this mismatch may hurt performance on downstream tasks and needs careful consideration during model development.

2. Limited Context Window: AE models typically operate within a fixed context window size, constraining their ability to capture long-range dependencies in language. This limitation may lead to suboptimal performance, particularly in tasks requiring comprehension of extended contexts.

3. Fine-tuning Data Requirement: While AE models leverage transfer learning to enhance performance, achieving optimal results often necessitates a substantial amount of task-specific data for fine-tuning. In scenarios with limited or low-quality labeled data, the full benefits of pretraining may not be realized, requiring strategic resource allocation and data augmentation strategies.

4. Semantic Understanding: While proficient in capturing syntactic patterns and contextual relationships, AE models may encounter challenges in deeper semantic understanding, such as reasoning or inference. This limitation may constrain their performance on tasks demanding higher-level language comprehension and reasoning abilities.

Notable AELMs

BERT: This pioneer paved the way for AELMs by demonstrating their effectiveness in various NLP tasks.

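BART: This versatile model pairs a BERT-style bidirectional encoder with an autoregressive decoder and is pre-trained as a denoising autoencoder. It excels at text summarization, translation, and even question answering, and it reappears below among the encoder-decoder models.

XLNet: This powerful model uses a unique permutation language modeling approach. Strictly speaking it is a generalized autoregressive model, but it is often discussed alongside AELMs because it captures bidirectional context without relying on [MASK] tokens.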

Encoder-decoder models

The third category combines the autoencoding and autoregressive approaches, as in the T5 (Text-to-Text Transfer Transformer) model. Developed by Google and published in 2020, T5 can perform both natural language understanding (NLU) and natural language generation (NLG). It can be understood as a full Transformer that uses both the encoder and the decoder networks. It approaches every task as a conversion or generation task, treating inputs and outputs alike as sequences (sequence-to-sequence). The same framing has also been applied beyond text-to-text tasks to multimodal challenges like text-to-image or image-to-text conversion. For example, in text classification, the encoder accepts text input, while the decoder produces the label as text instead of predicting a class index directly. Encoder-decoder or seq2seq models are commonly employed for tasks demanding both comprehension and generation, where information must be transformed from one format to another, like in machine translation.
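The text-to-text framing can be illustrated by formatting different tasks as plain (input text, target text) pairs. In this sketch the function name and the sentiment prefix are hypothetical; the translation and summarization prefixes follow the style of task prefixes used with T5.

```python
def to_text_to_text(task, text, target=None):
    """Format one example as an (input_text, target_text) pair."""
    prefixes = {
        "translate_en_de": "translate English to German: ",
        "summarize": "summarize: ",
        "sentiment": "classify sentiment: ",  # hypothetical prefix for illustration
    }
    return prefixes[task] + text, target

examples = [
    to_text_to_text("translate_en_de", "That is good.", "Das ist gut."),
    to_text_to_text("sentiment", "I loved this film.", "positive"),
]
for source, target in examples:
    # Both tasks now look identical to the model: text in, text out.
    print(f"{source!r} -> {target!r}")
```

Because the classification label is emitted as the word "positive" rather than a class index, the same model, loss, and decoding procedure can serve every task.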

Strengths

1. Versatility: Encoder-decoder models like BART and T5 demonstrate remarkable versatility, capable of addressing a wide range of natural language processing tasks, including but not limited to translation, summarization, question answering, and text generation. Their ability to handle diverse tasks within a unified framework simplifies model development and deployment.

2. Transfer Learning: These models excel at transfer learning, leveraging pretraining on large-scale datasets to acquire generalized language understanding and then fine-tuning on task-specific data to achieve state-of-the-art performance on various NLP tasks. This pretraining-fine tuning paradigm significantly reduces the need for large task-specific datasets and facilitates rapid development and deployment of NLP systems.

3. Bidirectionality and Contextual Understanding: Models like BART pair a bidirectional encoder with an autoregressive decoder, allowing the encoder to capture contextual information from both past and future tokens. This bidirectional understanding enhances their ability to comprehend and generate coherent and contextually appropriate text, improving performance across a wide range of tasks.

4. Unified Text-to-Text Framework: T5's text-to-text approach simplifies model training and deployment by framing all tasks as text transformations. This unified framework eliminates the need for task-specific architectures or fine-tuning strategies, streamlining the development process and enabling seamless adaptation to new tasks.

Limitations

1. Computational Resources: Training and deploying encoder-decoder models like BART and T5 require significant computational resources, including high-performance GPUs or TPUs and large-scale datasets. This computational overhead may pose challenges for researchers or organizations with limited resources, hindering widespread adoption and deployment.

2. Interpretability: The complex nature of encoder-decoder models can make them less interpretable compared to simpler models. Understanding how these models arrive at their predictions, particularly for complex tasks like text generation, may be challenging, limiting their applicability in sensitive domains where interpretability is critical.

3. Data Efficiency: While encoder-decoder models excel at transfer learning, achieving optimal performance often requires large-scale pretraining datasets and task-specific fine-tuning datasets. In scenarios where labeled data is scarce or of low quality, the benefits of transfer learning may be limited, and models may struggle to generalize to new tasks or domains.

4. Robustness to Adversarial Inputs: Encoder-decoder models, like other deep learning models, may be susceptible to adversarial attacks, where small perturbations to input data can lead to incorrect or unintended outputs. Ensuring the robustness of these models to adversarial inputs remains an ongoing challenge in NLP research.

Notable encoder-decoder models

Examples of encoder-decoder models include T5, BART, and BigBird (in its encoder-decoder configuration). These models excel in capturing the intricate relationships between inputs and outputs across diverse tasks, enabling versatile applications in natural language processing and beyond.

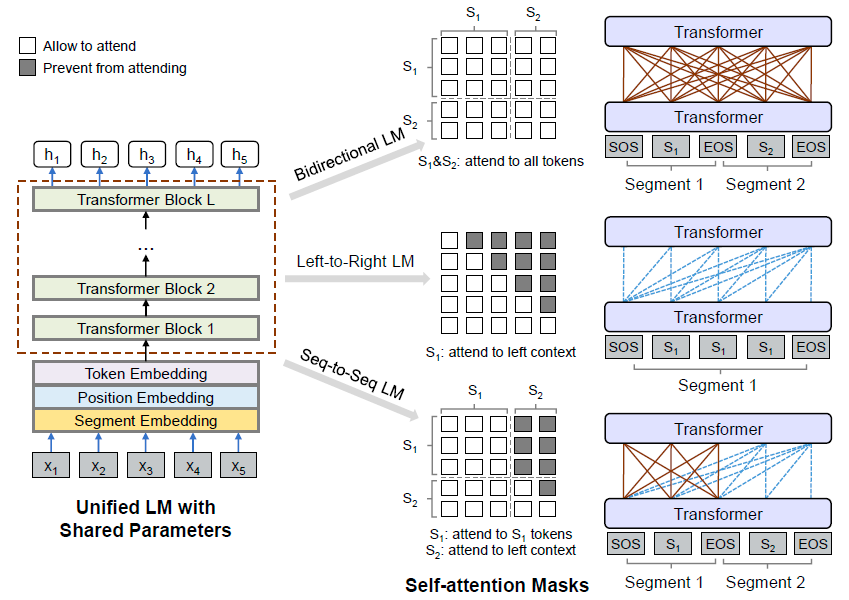

Overview of unified LM pre-training. The model parameters are shared across the LM objectives (i.e., bidirectional LM, unidirectional LM, and sequence-to-sequence LM). Courtesy of [Unified Language Model Pre-training arxiv.1905.03197].

Key Technical Concepts in LLMs

In this section, we'll explore two fundamental pillars: self-attention and pre-training objectives and strategies.

Self-attention

Decoding relationships between words. Imagine reading a sentence, not just word by word, but understanding how each word relates to the others. That's the essence of self-attention, the mechanism that empowers LLMs. It captures dependencies and relationships within an input sequence, allowing the model to identify and weigh the importance of different parts of the sequence by attending to the sequence itself.

Self-attention operates by transforming each token of the input sequence into three vectors: a query, a key, and a value. These vectors are obtained through learned linear transformations of the input. For each token, the attention mechanism scores the similarity between its query and every key, normalizes the scores into weights, and computes a weighted sum of the values. The resulting weighted sum, along with the original input, is then passed through a feed-forward neural network to produce the final output. This process allows the model to focus on relevant information and capture long-range dependencies.
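The query, key, and value computation can be sketched in plain Python. This is a minimal single-head illustration under stated assumptions: it uses the standard scaled dot-product form, softmax(QK^T / sqrt(d_k)) V, the embeddings are hypothetical, and identity matrices stand in for the learned projections. The feed-forward step and multiple heads are omitted.

```python
import math

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(row):
    """Turn a list of scores into weights that sum to 1."""
    peak = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x, w_q, w_k, w_v):
    """Scaled dot-product self-attention for one sequence.

    x holds one row per token; w_q, w_k, w_v are projection matrices.
    Returns (outputs, attention_weights).
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    k_transposed = [list(col) for col in zip(*k)]
    scores = matmul(q, k_transposed)  # similarity of every query with every key
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights  # weighted sum of the values

# Hypothetical 2-dimensional embeddings for three tokens.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]  # stand-in for learned projections
outputs, weights = self_attention(x, identity, identity, identity)
for row in weights:
    print([round(w, 3) for w in row])
```

Each printed row shows how strongly one token attends to every token in the sequence, including itself; in a trained model the projection matrices are learned so that these weights reflect meaningful relationships.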

Think of it like attending a party:

Guests: Words in the sentence.

Conversations: Connections and relationships between words.

Attention: Focusing on specific conversations (word relationships) relevant to understanding the overall meaning.

Self-attention allows LLMs to:

Identify crucial connections: It analyzes all word pairs simultaneously, understanding how each word influences and interacts with others in the sentence.

Contextual awareness: By focusing on relevant relationships, LLMs gain a deeper understanding of the sentence's meaning, considering the context of each word.

Long-range dependencies: Unlike traditional models that struggle with long sentences, self-attention can capture relationships between words even if they're far apart in the sentence.

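For example, in a sentence that mentions both "the dog" and "the fish" and later uses the pronoun "it," self-attention weighs the relationship between "it" and each candidate noun, allowing the model to work out that "it" refers back to the subject "the dog."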

Pre-training: Learning Before the Task Begins

Imagine sending a child to school without any prior knowledge. That's how traditional language models were trained: starting from scratch for each specific task. However, LLMs leverage a powerful technique called pre-training to gain foundational knowledge before tackling specific tasks.

Think of it like preparing for a job interview:

General knowledge building: LLMs are exposed to massive amounts of text data (books, articles, code), learning the building blocks of language and general relationships between words.

Adaptability: This pre-trained knowledge acts as a foundation, allowing LLMs to adapt and learn new tasks more efficiently.

Fine-tuning: Once the foundation is laid, LLMs are fine-tuned on specific tasks using smaller datasets relevant to that task. This refines their skills for targeted performance.

The figure below shows the pre-training and fine-tuning of BERT.

Pre-training objectives and strategies are pivotal in shaping the capabilities and performance of LLMs. Here are some key concepts in this domain:

Masked Language Modeling (MLM): MLM involves randomly masking a percentage of tokens in the input sequence and training the model to predict the original tokens based on the context provided by the unmasked tokens. This objective, used in models like BERT, facilitates bidirectional learning and enables the model to capture contextual information effectively.

Autoregressive Language Modeling (ALM): ALM requires the model to predict the next token in a sequence given the preceding tokens. Models like GPT use ALM during pre-training, allowing them to generate coherent and contextually appropriate text.

Multi-Task Learning: Multi-task learning involves jointly training a model on multiple related tasks during pre-training. This approach enhances the model's ability to learn robust representations by leveraging the shared information across tasks.

Large-Scale Pre-Training Data: Pre-training LLMs on large-scale text corpora, such as Wikipedia articles or Common Crawl data, helps capture diverse linguistic patterns and semantics, leading to better generalization to downstream tasks.

Fine-Tuning and Transfer Learning: After pre-training, LLMs are fine-tuned on task-specific data or downstream tasks to adapt their representations to the specific characteristics of the task. Transfer learning from pre-trained LLMs has become a standard practice in NLP, enabling efficient development and deployment of models for various applications.
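For the autoregressive objective in the list above, the training targets come for free from the text itself: the label at each position is simply the next token. The sketch below uses hypothetical helper names and a toy sentence to show the two equivalent views of this, as explicit (context, target) pairs and as a shifted label sequence.

```python
def next_token_examples(tokens):
    """Return one (context, target) pair per predicted position."""
    return [(tokens[:t], tokens[t]) for t in range(1, len(tokens))]

def shifted_inputs_and_labels(token_ids):
    """Inputs are all tokens but the last; labels are the same ids shifted left by one."""
    return token_ids[:-1], token_ids[1:]

tokens = ["LLMs", "predict", "the", "next", "token"]
for context, target in next_token_examples(tokens):
    print(f"{' '.join(context):<25} -> {target}")

# The same idea on integer ids, as a training loop would typically see it.
inputs, labels = shifted_inputs_and_labels([11, 42, 7, 99, 5])
print(inputs, labels)
```

A single sequence of length T therefore yields T-1 training signals, which is part of why raw text corpora are such an efficient source of pre-training data.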

The figure below shows a high-level overview of the GPT pre-training and fine-tuning steps. Courtesy of OpenAI.

Applications of LLMs

In this section, we explore some of the key applications of LLMs, highlighting their capabilities and impact on tasks such as text generation, question answering, summarization, and translation.

The chart below shows the capabilities of LLMs. [LLM Survey arxiv.2402.06196]

Text Generation

Text generation is one of the core capabilities of LLMs, allowing them to produce coherent and contextually relevant text based on given prompts or input sequences. By leveraging the contextual information encoded in their parameters, LLMs can generate text that exhibits characteristics similar to human-written language. This capability has numerous applications in various domains, including content creation, dialogue systems, and creative writing.

For example, LLMs like OpenAI's GPT series and Google’s Gemini Ultra have demonstrated remarkable proficiency in generating realistic and diverse text across a wide range of topics and styles. These models can be fine-tuned on specific datasets or prompts to tailor their output to particular domains or tasks, making them versatile tools for content generation.

Question Answering

LLMs excel at question answering tasks, where they are tasked with generating accurate and informative responses to user queries based on given context or knowledge sources. By leveraging their understanding of language semantics and context, LLMs can effectively extract and synthesize relevant information from large corpora or knowledge bases to answer questions across various domains.

To see this in practice, let's consider a scenario where we want to generate a continuation of a given text prompt. We'll use the open-weight Mixtral-8x7B-Instruct model as an example, since its checkpoint can be loaded with the Hugging Face Transformers library; API-only models such as GPT-4 cannot be downloaded and run this way.

First, we need to prepare our environment and load the pre-trained model. Assuming we have a Python environment set up with the necessary libraries installed, we load the model and its corresponding tokenizer using the Hugging Face Transformers library. We then define a function to generate text based on a given prompt.

from transformers import AutoTokenizer, AutoModelForCausalLM

# Load Mixtral model and tokenizer
# Note: the weights are very large, so loading them needs substantial memory.
model_id = "mistralai/Mixtral-8x7B-Instruct-v0.1"
tokenizer = AutoTokenizer.from_pretrained(model_id)
model = AutoModelForCausalLM.from_pretrained(model_id)

# Define a function to generate text using Mixtral
def generate_text(prompt, model, tokenizer):
    # Tokenize the prompt into PyTorch tensors (input_ids and attention_mask)
    inputs = tokenizer(prompt, return_tensors="pt")
    # Unpack the encoding so generate() receives input_ids and attention_mask by keyword
    output = model.generate(**inputs, max_length=100, num_return_sequences=1)
    # Decode the first (and only) returned sequence back into text
    return tokenizer.decode(output[0], skip_special_tokens=True)

# Use the function to generate text
prompt = "The quick brown fox jumps over the lazy dog."
generated_continuation = generate_text(prompt, model, tokenizer)
print(generated_continuation)

This code snippet will print out a continuation of the given text prompt using the Mixtral-8x7B model. Note that the actual text generated will depend on the specific model and its training data.

Additionally, to fine-tune the LLM for specific tasks, one might use the Hugging Face TRL, Transformers, and Datasets libraries. The fine-tuning process involves setting up training arguments, loading a pre-trained model, and then training it on a custom dataset using the SFTTrainer. After training, the model can be evaluated to measure its performance on a validation set.
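Returning to generation itself: conceptually, producing a continuation means repeatedly predicting a next token and appending it to the sequence. The sketch below illustrates greedy decoding, the simplest strategy, which always picks the highest-scoring candidate. The score table is a hypothetical stand-in for a real model and looks only at the last token; it is not how any particular library implements generation.

```python
# Hypothetical toy "model": next-token scores given only the last token.
TOY_SCORES = {
    "the": {"quick": 2.0, "lazy": 1.0},
    "quick": {"fox": 3.0, "dog": 0.5},
    "fox": {"jumps": 2.5, "<eos>": 0.1},
    "jumps": {"<eos>": 1.0},
}

def greedy_generate(prompt_tokens, scores=TOY_SCORES, max_new_tokens=10, eos="<eos>"):
    """Extend the prompt one token at a time, always taking the best-scoring token."""
    tokens = list(prompt_tokens)
    for _ in range(max_new_tokens):
        candidates = scores.get(tokens[-1])
        if not candidates:  # the toy model has no prediction for this token
            break
        next_token = max(candidates, key=candidates.get)
        if next_token == eos:  # the model signals the end of the sequence
            break
        tokens.append(next_token)
    return tokens

print(" ".join(greedy_generate(["the"])))  # prints "the quick fox jumps"
```

The `max_new_tokens` cap plays the same role as the length limit in the listing above: it guarantees that generation stops even if the model never emits an end-of-sequence token.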

Summarization

LLMs play a crucial role in automatic summarization, where they are employed to generate concise and informative summaries of longer documents or text passages. By distilling the essential information from input texts, LLMs enable users to quickly grasp the key points and main ideas without having to read through the entire document.

Models like Google's Pegasus and Facebook AI's BART have demonstrated impressive performance on abstractive summarization tasks, where they generate summaries that go beyond mere extraction of sentences and instead produce coherent and contextually relevant summaries. These models leverage their understanding of language semantics and structure to ensure the fluency and coherence of the generated summaries, making them valuable tools for content summarization and information extraction tasks.

# pip install transformers torch

import logging

from transformers import AutoTokenizer, AutoModelForSeq2SeqLM, pipeline

# Show INFO-level log messages

logging.basicConfig(level=logging.INFO)

# Function to load a sequence-to-sequence summarization model

def load_model(model_id):

    logging.info(f"Loading Model: {model_id}")

    tokenizer = AutoTokenizer.from_pretrained(model_id)

    model = AutoModelForSeq2SeqLM.from_pretrained(model_id)

    summarization_pipeline = pipeline('summarization', model=model, tokenizer=tokenizer)

    logging.info("Model loaded successfully.")

    return summarization_pipeline

# Function to summarize text

def summarize_text(text, summarization_pipeline):

    summary = summarization_pipeline(text, max_length=150, min_length=40, do_sample=False)

    return summary[0]['summary_text']

# Example usage

# The summarization pipeline needs an encoder-decoder model such as BART or Pegasus.

# A decoder-only model like Llama 2 cannot be loaded with AutoModelForSeq2SeqLM.

model_id = "facebook/bart-large-cnn"  # model ID for summarization

summarization_pipeline = load_model(model_id)

text_to_summarize = """

A long document or passage that needs to be summarized goes here. This could be a news article, academic paper, or any lengthy piece of text. The goal is to condense the information into a shorter version that captures the main points without losing the essence of the original content.

"""

summary = summarize_text(text_to_summarize, summarization_pipeline)

print(summary)

The preceding code loads a BART summarization model and performs text summarization. The load_model function builds the summarization pipeline. The summarize_text function takes the text you want to summarize and the pipeline you've set up, and returns the summarized text.

Translation

LLMs have transformed the field of machine translation, enabling the development of more accurate and fluent translation systems. By leveraging their multilingual capabilities and knowledge of language semantics, LLMs can translate text between different languages with remarkable accuracy and fluency.

For example, models like Google's T5 and the Marian-based OPUS-MT models have demonstrated strong performance on multilingual translation tasks, often improving on traditional machine translation systems in translation quality and fluency. These models can capture many of the nuances and idiosyncrasies of different languages, producing translations that are natural-sounding and contextually appropriate.

According to the LLM Leaderboard Best Models list on Hugging Face, the model alchemonaut/QuartetAnemoi-70B-t0.0001 was listed among the best base models at the time of writing. Because it is a decoder-only (causal) model rather than an encoder-decoder model, it is loaded with AutoModelForCausalLM and asked to translate through a prompt, not through the dedicated translation pipeline. To use this model for translation, you would set up your Python environment with the necessary libraries and then load the model along with its tokenizer using the Hugging Face Transformers library.

Here's an example of how to use the QuartetAnemoi-70B model for translation:

from transformers import AutoTokenizer, AutoModelForCausalLM, pipeline

# Set up the model and tokenizer

model_name = "alchemonaut/QuartetAnemoi-70B-t0.0001"

tokenizer = AutoTokenizer.from_pretrained(model_name)

model = AutoModelForCausalLM.from_pretrained(model_name)

# Create a text-generation pipeline; the translation task is expressed in the prompt

generation_pipeline = pipeline('text-generation', model=model, tokenizer=tokenizer)

# Translate text from English to German

english_text = "Translate this text to German."

prompt = f"Translate the following English text to German.\nEnglish: {english_text}\nGerman:"

result = generation_pipeline(prompt, max_new_tokens=60, do_sample=False, return_full_text=False)

translated_text = result[0]['generated_text'].strip()

print(translated_text)

In this example, the language pair (English to German) is stated in the prompt. To translate between other languages, adjust the prompt accordingly. The QuartetAnemoi-70B model is selected based on its standing on the leaderboard, which reflects general benchmark performance, so translation quality for a given language pair should be checked separately.

Additionally, ensure that you have the necessary permissions and resources to use this model, considering its size and the computational requirements involved in running such a large model for translation tasks.

Limitations and Challenges of LLMs

Indistinguishability between Generated and Human-Written Text: Large Language Models (LLMs) can generate text that is so fluent and coherent that it is often indistinguishable from human-written content. This poses a significant challenge as it becomes difficult to tell if the output is from a human or the model itself. Detecting LLM-generated text is crucial for several reasons, including combating misinformation, preventing plagiarism, and thwarting impersonation and fraud. Approaches to detecting LLM-generated text include visualizing statistically improbable tokens, using energy-based models, and examining authorship attribution problems. Techniques such as watermarking, which involves adding hidden patterns to generated text, have been investigated for their effectiveness in identifying LLM-generated text. However, adversaries can attempt to evade detection by rephrasing the generated text to remove distinctive signatures, a challenge known as paraphrasing attacks.
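To make the watermarking idea more concrete, here is a minimal sketch of a toy "green list" scheme. A hash of the previous token pseudo-randomly marks half of a small vocabulary as green, a watermarking generator draws only green tokens, and a detector counts green tokens and converts the count to a z-score. The vocabulary, the hashing choice, the random "model", and all function names are assumptions made for this illustration, not a production scheme.

```python
import hashlib
import math
import random

VOCAB = [f"w{i}" for i in range(50)]  # hypothetical toy vocabulary
GREEN_FRACTION = 0.5  # share of the vocabulary marked "green" at each step


def green_list(prev_token):
    """Pseudo-randomly pick the green tokens, seeded by the previous token."""
    seed = int(hashlib.sha256(prev_token.encode("utf-8")).hexdigest(), 16)
    rng = random.Random(seed)
    shuffled = VOCAB[:]
    rng.shuffle(shuffled)
    return set(shuffled[: int(len(VOCAB) * GREEN_FRACTION)])


def generate(length, watermark, seed=0):
    """Stand-in for a language model: samples tokens uniformly at random.

    With watermark=True, every token is drawn only from the green list.
    """
    rng = random.Random(seed)
    tokens = ["w0"]
    for _ in range(length):
        candidates = sorted(green_list(tokens[-1])) if watermark else VOCAB
        tokens.append(rng.choice(candidates))
    return tokens


def watermark_z_score(tokens):
    """z-score of the green-token count against the no-watermark expectation."""
    n = len(tokens) - 1
    hits = sum(tokens[i] in green_list(tokens[i - 1]) for i in range(1, len(tokens)))
    expected = GREEN_FRACTION * n
    std = math.sqrt(n * GREEN_FRACTION * (1 - GREEN_FRACTION))
    return (hits - expected) / std


marked = generate(200, watermark=True)
plain = generate(200, watermark=False)
print(f"watermarked z-score: {watermark_z_score(marked):.1f}")
print(f"plain z-score:       {watermark_z_score(plain):.1f}")
```

A large z-score suggests the text carries the watermark, while unmarked text should score near zero. A paraphrasing attack works by replacing enough tokens that the green-token count falls back toward the expected value.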

Biases and Toxicity: LLMs can inherit biases from their training data, which can lead to biased outputs. This is particularly concerning because if the training data contains toxic content, such as hate speech or discriminatory language, the LLM can also generate such content. Mitigation strategies for these biases include fine-tuning the model with human preferences or instructions and the development of toolkits for debiasing models. It is also important to ensure that the model does not generate inappropriate or harmful content, which can be a significant ethical concern.

Prompt Injections and Agency: LLMs can be sensitive to prompt injections, which can lead to unsafe behavior. This is because the responses generated by LLMs are based on the prompts given to them, and if the prompts are manipulated, the model can produce outputs that are not aligned with human values or intentions. Additionally, LLMs lack a sense of agency and do not have the ability to understand the implications of their actions, which can lead to misaligned responses. Addressing these challenges requires a combination of technical innovation, ethical considerations, and ongoing research efforts.

Unfathomable Datasets: The large size of pre-training datasets used for LLMs makes it nearly impossible for individuals to assess the content thoroughly. This issue, referred to as "Unfathomable Datasets," is a significant challenge because the sheer volume of data can lead to problems with data quality, duplication, and contamination. Issues with near-duplicates in the data can harm model performance, and benchmark data contamination arises when the training data overlaps with the evaluation test set, leading to inflated performance metrics. Addressing these challenges is crucial to ensure accurate, diverse, and bias-free data.
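The two data problems named above, near-duplicates and benchmark contamination, can both be approximated with n-gram overlap. The sketch below is a simplified illustration: it measures near-duplication with Jaccard similarity over word trigrams and flags a benchmark item as contaminated if it shares any trigram with the training data. The example texts, the choice of trigrams, and the function names are assumptions for this example; real pipelines use more scalable techniques.

```python
def word_ngrams(text, n=3):
    """Return the set of lowercase word n-grams (shingles) in a text."""
    words = text.lower().split()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def jaccard(a, b):
    """Jaccard similarity of two sets; 0.0 when both are empty."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def contamination_rate(benchmark_items, training_docs, n=3):
    """Fraction of benchmark items sharing at least one n-gram with the training data."""
    train_ngrams = set()
    for doc in training_docs:
        train_ngrams |= word_ngrams(doc, n)
    flagged = [item for item in benchmark_items if word_ngrams(item, n) & train_ngrams]
    return len(flagged) / len(benchmark_items)


# Hypothetical miniature corpus and benchmark
training_docs = [
    "the capital of france is paris and it lies on the seine",
    "The capital of France is Paris and it lies on the Seine river",
    "transformers use self-attention to weigh tokens in a sequence",
]
benchmark_items = [
    "what is the capital of france",
    "who wrote the novel moby dick",
]

similarity = jaccard(word_ngrams(training_docs[0]), word_ngrams(training_docs[1]))
print(f"near-duplicate similarity: {similarity:.2f}")
print(f"contaminated benchmark share: {contamination_rate(benchmark_items, training_docs):.2f}")
```

The first two training documents differ by a single word, so their similarity is close to 1, and the first benchmark question overlaps with them while the second does not.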

Privacy Concerns: Personally Identifiable Information (PII) has been found within pre-training data, which can cause privacy breaches during prompting. This is a significant concern because the use of PII in LLMs can lead to the disclosure of sensitive information, and it is essential to address this issue to protect the privacy of individuals.
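One common mitigation is to scrub obvious PII from text before it is used for training or sent in a prompt. The following is a minimal sketch that assumes only two deliberately simple patterns, email addresses and one phone-number format. The patterns and the redact_pii helper are illustrative assumptions and would miss many real-world cases.

```python
import re

# Deliberately simple patterns for illustration; real PII detection needs far more care.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def redact_pii(text):
    """Replace each match with a placeholder and count matches per category."""
    counts = {}
    for label, pattern in PII_PATTERNS.items():
        text, counts[label] = pattern.subn(f"[{label}]", text)
    return text, counts


sample = "Contact Jane at jane.doe@example.com or 555-123-4567 for details."
clean, counts = redact_pii(sample)
print(clean)
print(counts)
```

Note that the name "Jane" survives redaction, which shows why pattern matching alone is not sufficient for protecting privacy.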

Contextual Understanding: LLMs can struggle with understanding context, which can lead to incorrect or nonsensical responses. This is because LLMs generate responses based on patterns learned from their training data, and if the context of a prompt is not clearly defined or is complex, the model may not be able to generate an appropriate response. Overcoming this challenge requires the development of models that can better understand the context in which they are operating.

Generating Misinformation: LLMs can generate content that is factually incorrect or misleading because they generate responses based on patterns learned from their training data. This can lead to the spread of misinformation, which is a significant concern, especially in the context of information dissemination and decision-making. Addressing this challenge requires the development of techniques to detect and mitigate misleading or false outputs.

Ethical Concerns: LLMs can be used to generate deepfake text or to automate the creation of misleading news articles or propaganda. This raises serious ethical concerns, because such content can manipulate public opinion and spread misinformation at scale. Addressing these ethical concerns requires a combination of technical innovation, ethical considerations, and ongoing research efforts.

Lack of Creativity: LLMs are pattern recognition systems and do not truly understand or create new content in the same way a human would. This can be a limitation in situations where creativity and novelty are required. Addressing this challenge requires the development of models that can generate content that is not only accurate and relevant but also creative and novel.

Controllability: Controlling the output of LLMs is a significant challenge. They can sometimes generate content that is inappropriate, biased, or factually incorrect. This can lead to unintended or harmful outcomes, and it is important to develop methods to control the behavior of LLMs and ensure that they generate outputs that are aligned with human values and objectives.

Quality of Generated Text: The quality of the output from LLMs can sometimes be inconsistent, producing outputs that are nonsensical or even harmful. This can affect applications like content moderation, information dissemination, and decision-making, underscoring the importance of addressing these issues. Strategies such as pre-training with human feedback, efficient fine-tuning methods, watermarking, and robust evaluation techniques help mitigate challenges and enhance the usability of LLMs.

Adaptability: While LLMs can handle a wide variety of tasks, they are general-purpose models, and fully fine-tuning them is resource-intensive, which can make them harder to adapt to a specific task or domain. Addressing this challenge requires the development of more efficient fine-tuning methods and the exploration of techniques like pre-training domain mixtures and fine-tuning task mixtures.

Evaluating LLMs

Evaluating Large Language Models (LLMs) is a multifaceted process that goes beyond mere performance metrics. It encompasses the assessment of accuracy, safety, and fairness, ensuring that LLMs are not only effective but also ethically sound and user-friendly.

- Bias and Ethical Oversight: Evaluations are crucial for spotlighting biases and controversial outputs, thereby encouraging ethical AI practices and fostering unbiased AI solutions. They serve as a check, ensuring that the outputs of LLMs do not propagate harm, misinformation, or biases, and that the models uphold ethical guidelines.

- Boosting User Experience: Ensuring that AI-generated content aligns with user needs is a key aspect of LLM evaluations. By validating that AI consistently aligns with societal expectations, trust is built, enhancing user-AI engagement.

- Versatility and Expertise: Assessments of LLMs reveal their breadth across topics, adaptability to various writing styles, and domain proficiency. This is vital for understanding the model's versatility and its ability to perform in different contexts, whether it's in legal jargon, medical terms, or technical writing.

- Meeting Regulatory Standards: Evaluations ascertain that LLMs meet the prevailing legal and ethical benchmarks. This is essential for ensuring that the models operate within the legal framework and do not infringe on user privacy or data security standards.

- Spotting Shortcomings: Evaluations help identify weaknesses in LLMs, whether in nuanced understanding or intricate query resolution. This is a critical step in the development process, allowing for improvements and refinements to be made.

- Real-world Validation: Practical tests are essential for validating the real-world utility of LLMs. These tests prove the worth of LLMs in actual scenarios, beyond controlled environments, which is vital for assessing their effectiveness in real-world applications.

- Upholding Accountability: Evaluations ensure responsible AI releases and hold creators accountable for their outputs. This is a critical aspect of maintaining trust in AI technologies and ensuring that they are developed and deployed responsibly.

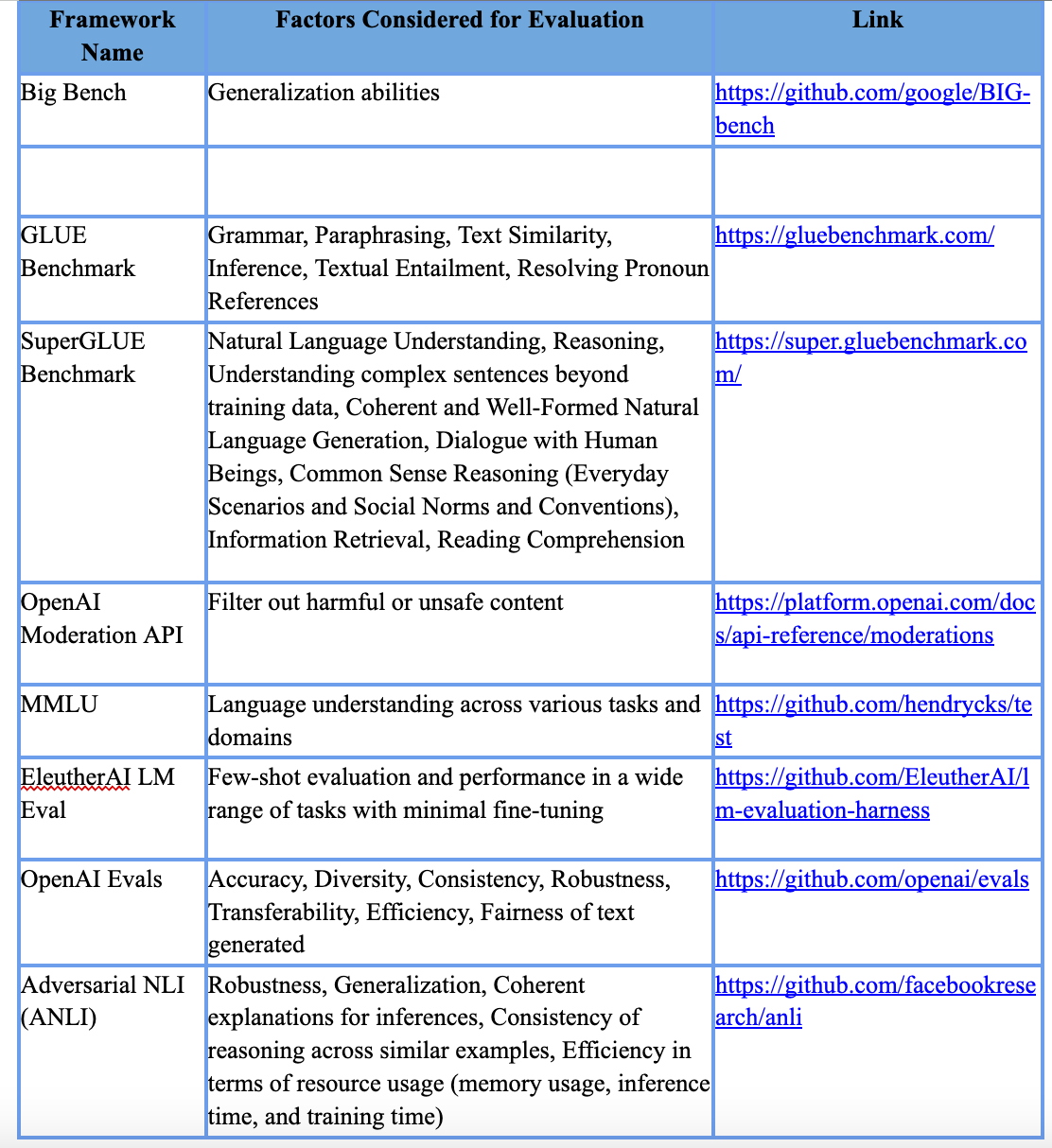

Evaluation methods for LLMs can be subjective and time-consuming, especially when using human evaluators. Human evaluation provides qualitative depth, but it varies with individual perspectives and biases, and it is slower and more expensive than automated metrics. It is therefore important to combine human evaluation with quantitative metrics for a comprehensive and efficient evaluation process.

Zero-shot Evaluation tests the model on tasks it has not been explicitly trained on, which provides a more realistic measure of the model's capabilities in the real world. Performance Assessment involves evaluating how well LLMs generate text and respond to input, using criteria such as accuracy, fluency, coherence, and subject relevance.

Model Comparison is another important aspect of LLM evaluation, helping researchers and practitioners compare different models and measure progress. This aids in the selection of the most appropriate model for a given application.

Benchmarking Steps for evaluating LLM performance include model evaluation, where models are measured based on their ability to generate accurate, coherent, and contextually appropriate responses, and comparative analysis, where the evaluation results are analyzed to compare the performance of different LLM models on each benchmark task.

Common Evaluation Methods include perplexity, human evaluation, BLEU, ROUGE, and diversity measures. However, these methods have their limitations, such as the subjectivity and high cost of human evaluation, limited reference data, a lack of standard diversity metrics, and weak generalization to real-world scenarios.

Best Practices for assessing LLMs include using diverse datasets, multi-faceted evaluation, real-world testing, regular updates, feedback loops, inclusive evaluation teams, open peer review, continuous learning, scenario-based testing, and ethical considerations.

Evaluation frameworks like BIG-bench, GLUE, and SuperGLUE are essential for measuring and benchmarking model capabilities, helping in pinpointing a model's strengths, weaknesses, and performance across diverse contexts.
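Several of the automated metrics named above are simple enough to sketch in a few lines. The example below is a simplified illustration rather than a reference implementation. It computes perplexity from per-token probabilities, a unigram-overlap F1 in the spirit of ROUGE-1 (without stemming or stopword handling), and distinct-n as one possible diversity measure. The input values are hypothetical, and BLEU is omitted for brevity.

```python
import math
from collections import Counter


def perplexity(token_probs):
    """Perplexity from the probabilities a model assigned to each observed token."""
    avg_neg_log = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log)


def rouge1_f1(candidate, reference):
    """Unigram-overlap F1, a simplified form of ROUGE-1."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    overlap = sum((cand & ref).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


def distinct_n(texts, n=2):
    """Diversity measure: unique n-grams divided by total n-grams across outputs."""
    ngrams = []
    for text in texts:
        words = text.lower().split()
        ngrams.extend(tuple(words[i:i + n]) for i in range(len(words) - n + 1))
    return len(set(ngrams)) / len(ngrams) if ngrams else 0.0


# Hypothetical values chosen only to exercise the functions
print(perplexity([0.25, 0.25, 0.25, 0.25]))
print(rouge1_f1("the cat sat on the mat", "the cat lay on the mat"))
print(distinct_n(["the cat sat", "the cat ran"]))
```

Lower perplexity means the model found the text less surprising, while higher overlap and distinct-n scores indicate closer agreement with the reference and more varied output, respectively.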

Challenges with Current Evaluation Techniques include inconsistency across evaluations, which might yield different results for the same model, making it difficult to derive a consistent understanding of an LLM's capabilities. In the evolving world of NLP, accurately evaluating LLMs is crucial. Future evaluation methodologies will likely accentuate context, emotional resonance, and linguistic subtleties, with ethical considerations taking center stage.

Evaluation metrics and frameworks

G-Eval: This framework uses LLMs to evaluate LLM outputs by generating a series of evaluation steps and then using these steps to determine a final score. It requires several pieces of information to work and can generate a reason for its evaluation score.

GPTScore: This metric uses the conditional probability of generating the target text as an evaluation metric, differing from G-Eval which directly performs the evaluation task.

LangChain: LangChain provides various types of evaluators, including string evaluators for assessing the accuracy of predicted strings, trajectory evaluators for analyzing decision-making processes, and comparison evaluators for contrasting outcomes of two different runs on the same input.

LLM-Eval: This method evaluates multiple dimensions of conversation quality using a single LLM prompt, offering a robust solution with a high correlation with human judgments across diverse datasets.

LLM-as-a-judge: This approach uses LLMs as a surrogate for human evaluation, demonstrating that LLM judges can achieve an agreement rate exceeding 80% with human evaluations.

DeepEval: An open-source evaluation framework that offers a variety of default metrics like Hallucination, Answer Relevance, Bias, Toxicity, and more, and allows for custom metric creation.

LlamaIndex: Offers evaluation tools for RAG applications, including response evaluation to ensure the response is in line with the retrieved context and retrieval evaluation to gauge the relevance of retrieved sources to the original query.

RAGAS: Incorporates traditional NLP metrics like BERTScore and NLI for well-rounded evaluations and uses self-consistency methods to ensure reliability in evaluation results.

These metrics and frameworks are designed to assess various aspects of LLM performance, including coherence, relevance, bias, and more, providing a comprehensive evaluation of the model's output.
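The agreement rate quoted for LLM-as-a-judge is straightforward to compute once judge and human verdicts are available for the same items. The sketch below assumes hypothetical pairwise verdicts ("A" or "B" for the better of two responses). It reports the raw agreement rate and Cohen's kappa, which corrects for agreement expected by chance; the kappa calculation is an addition for illustration and is not part of the approach as described above.

```python
def agreement_rate(judge_labels, human_labels):
    """Share of items on which the LLM judge and the human annotator agree."""
    if len(judge_labels) != len(human_labels):
        raise ValueError("label lists must have the same length")
    matches = sum(j == h for j, h in zip(judge_labels, human_labels))
    return matches / len(judge_labels)


def cohens_kappa(judge_labels, human_labels):
    """Agreement corrected for chance, for categorical labels."""
    n = len(judge_labels)
    observed = agreement_rate(judge_labels, human_labels)
    categories = set(judge_labels) | set(human_labels)
    expected = sum(
        (judge_labels.count(c) / n) * (human_labels.count(c) / n) for c in categories
    )
    return (observed - expected) / (1 - expected) if expected < 1 else 1.0


# Hypothetical pairwise verdicts: which of two responses ("A" or "B") is better
judge = ["A", "B", "A", "A", "B", "A", "B", "B", "A", "A"]
human = ["A", "B", "A", "B", "B", "A", "B", "A", "A", "A"]
print(f"agreement: {agreement_rate(judge, human):.0%}")
print(f"kappa:     {cohens_kappa(judge, human):.2f}")
```

Reporting a chance-corrected statistic alongside raw agreement helps when one verdict is much more common than the other, since raw agreement alone can then look deceptively high.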

LLM Books

In Chapter - Introduction to NLP, we encountered a handful of books. Here's a refined selection for you to jumpstart your LLM journey.

1. Practical Natural Language Processing - O'Reilly by Sowmya Vajjala, Bodhisattwa Majumder, Anuj Gupta, Harshit Surana - This book is a comprehensive guide to the practical aspects of NLP, including the use of LLMs. It covers a wide range of topics from the basics of NLP to advanced techniques for processing and generating natural language.

2. Natural Language Processing with Transformers - O'Reilly by Lewis Tunstall, Leandro von Werra, Thomas Wolf - This book dives into the use of transformer models, which are the backbone of many LLMs like GPT and BERT. It provides a detailed exploration of transformer architectures and their applications in NLP tasks.

3. Transformers for Natural Language Processing - Packt by Denis Rothman - This book focuses on the transformer models that are central to LLMs, offering an in-depth look at how these models can be used for NLP tasks. It covers both the theoretical aspects of transformers and their practical applications.

4. GPT-3: Building Innovative NLP Products Using Large Language Models - O'Reilly by Sandra Kublik and Shubham Saboo - This book is specifically about GPT-3, one of the most well-known LLMs. It provides insights into how to build NLP products using GPT-3 and offers practical examples and case studies.

5. Hands-On Generative AI with Transformers and Diffusion Models - O'Reilly by Pedro Cuenca, Apolinario Passos, Omar Sanseviero, Jonathan Whitaker - This book is a hands-on guide to working with generative AI models, including LLMs and diffusion models. It offers practical exercises and examples to help readers get started with these advanced AI techniques.

6. Quick Start Guide to Large Language Models by Sinan Ozdemir - The practical, step-by-step guide to using LLMs at scale in projects and products. Large Language Models (LLMs) like ChatGPT are demonstrating breathtaking capabilities, but their size and complexity have deterred many practitioners from applying them. In Quick Start Guide to Large Language Models, pioneering data scientist and AI entrepreneur Sinan Ozdemir clears away those obstacles and provides a guide to working with, integrating, and deploying LLMs to solve practical problems.

7. Understanding Large Language Models: Learning Their Underlying Concepts and Technologies by Thimira Amaratunga - This book will teach you the underlying concepts of large language models (LLMs), as well as the technologies associated with them. The book starts with an introduction to the rise of conversational AIs such as ChatGPT, and how they are related to the broader spectrum of large language models. From there, you will learn about natural language processing (NLP), its core concepts, and how it has led to the rise of LLMs. Next, you will gain insight into transformers and how their characteristics, such as self-attention, enhance the capabilities of language modeling, along with the unique capabilities of LLMs. The book concludes with an exploration of the architectures of various LLMs and the opportunities presented by their ever-increasing capabilities, as well as the dangers of their misuse.

8. Generative AI with LangChain: Build large language model (LLM) apps with Python, ChatGPT, and other LLMs by Ben Auffarth - Get to grips with the LangChain framework to develop production-ready applications, including agents and personal assistants. Code examples are regularly updated on GitHub to keep you abreast of the latest LangChain developments. With this book, you learn how to leverage LLMs' capabilities and work around their inherent weaknesses. Delve into the realm of LLMs with LangChain and go on an in-depth exploration of their fundamentals, ethical dimensions, and application challenges. Get better at using ChatGPT and GPT models, from heuristics and training to scalable deployment, empowering you to transform ideas into reality.

9. Generative AI on AWS - O'Reilly by Chris Fregly, Antje Barth, Shelbee Eigenbrode - Companies today are moving rapidly to integrate generative AI into their products and services. But there's a great deal of hype (and misunderstanding) about the impact and promise of this technology. In this book, authors from AWS help CTOs, ML practitioners, application developers, business analysts, data engineers, and data scientists find practical ways to use this exciting new technology.

LLM Libraries

LangChain - LangChain is a framework for developing applications that use language models. LangChain provides modules for models, prompts, indices, chains, and agents, with the ability to "chain" these modules together.

LlamaIndex - LlamaIndex (formerly GPT Index) is a framework for augmenting LLMs with private or custom data. It offers data connectors, indices and graphs that are LLM-compatible, and a query interface over the data.

LMFlow - LMFlow is an extensible toolbox for fine-tuning large language models. It supports common backbones such as LLaMA and GPT-.

Alpaca Farm - AlpacaFarm is a framework for research and development of systems that learn from human feedback (such as instruction-following LLMs). It contains code for simulating preference feedback, automated evaluation, and reinforcement learning algorithms such as PPO.

Flax - Flax is a neural network library for JAX (a library that provides composable differentiation and vectorization operations for the Python ecosystem) that is designed for flexibility.

GGML - GGML is a tensor framework for enabling large models to be run on commodity hardware. It provides integer quantization of models, support for different platforms and intrinsics, and web support. Popular libraries based on GGML include llama.cpp and whisper.cpp.

Hugging Face - Hugging Face is often described as the GitHub of machine learning. It provides the popular Transformers library for working with Transformer models, as well as its hub for machine learning models and datasets.

Lamini - Lamini is an LLM platform that allows developers to build and run their own custom, private LLMs. Capabilities include fine-tuning, RLHF, and optimizations so the self-hosted LLM runs efficiently.

MLC LLM - MLC LLM allows language models to be optimized and deployed natively on a broad set of hardware and native applications. Supported platforms include iOS, Android, Apple Silicon, AMD, Intel, NVIDIA, and WebGPU.

GPTCache: A library for creating a semantic cache to store responses from LLM queries

Haystack: A library for quickly composing applications with LLM Agents, semantic search, question-answering, and more

LangFlow: An effortless way to experiment and prototype LangChain flows with drag-and-drop components and a chat interface

LangKit: An out-of-the-box LLM telemetry collection library that extracts features and profiles prompts, responses, and metadata about how your LLM is performing over time

LiteLLM: A simple and lightweight package to standardize LLM API calls across various API endpoints

LLMApp: A Python library that helps you build real-time LLM-enabled data pipelines with few lines of code

LLMFlows: A framework for building simple, explicit, and transparent LLM applications such as chatbots, question-answering systems, and agents

LLMonitor: A library for observability and monitoring for AI apps and agents, offering powerful tracing and logging, usage analytics, and deep dives into request histories

Magentic: A library that seamlessly integrates LLMs as Python functions and allows mixing LLM queries and function calling with regular Python code

Parea AI: A platform and SDK for AI Engineers providing tools for LLM evaluation, observability, and a version-controlled enhanced prompt playground

OpenAI: A library that provides access to the capabilities of large language models, facilitating tasks such as text generation, translation, and more.

Cohere: A library that offers access to a range of language models for various natural language processing tasks.

Pinecone: A vector database with client libraries for indexing and retrieving embeddings, commonly used for retrieval in LLM applications

ChatOpenAI: A LangChain chat-model class that wraps OpenAI chat models and facilitates the creation of chatbots and other interactive applications using LLMs
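To give a feel for what a semantic cache such as the one GPTCache provides is meant to do, here is a toy sketch. It does not use the GPTCache API. It stores responses alongside a stand-in "embedding" (a bag-of-words count vector) and returns a cached response when a new query is similar enough. The embed function, the 0.8 threshold, and the fake_llm placeholder are all assumptions for this illustration; a real cache would use a neural encoder and a vector store.

```python
import math
from collections import Counter


def embed(text):
    """Stand-in embedding: a bag-of-words count vector."""
    return Counter(text.lower().replace("?", "").split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class SemanticCache:
    """Returns a stored response when a new query is similar enough to an earlier one."""

    def __init__(self, threshold=0.8):
        self.threshold = threshold
        self.entries = []  # list of (embedding, response) pairs

    def get(self, query):
        query_vec = embed(query)
        best = max(self.entries, key=lambda e: cosine(query_vec, e[0]), default=None)
        if best is not None and cosine(query_vec, best[0]) >= self.threshold:
            return best[1]
        return None

    def put(self, query, response):
        self.entries.append((embed(query), response))


def fake_llm(prompt):
    """Hypothetical placeholder for an expensive model call."""
    return f"answer to: {prompt}"


cache = SemanticCache()
calls = 0
questions = ["What is the capital of France?", "what is the capital of france", "Who wrote Hamlet?"]
for question in questions:
    answer = cache.get(question)
    if answer is None:
        calls += 1
        answer = fake_llm(question)
        cache.put(question, answer)
    print(question, "->", answer)
print("model calls:", calls)
```

The second question differs from the first only in casing and punctuation, so it is served from the cache and the placeholder model is called twice rather than three times.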

LLM Cheat Sheet

Purpose

The purpose of the LLM Cheat Sheet is to provide a quick and easy-to-use reference guide for NLP practitioners. It covers a wide range of topics related to language modeling and provides a high-level overview of the most essential concepts and techniques in the field. Large language models are designed to process natural language data and perform various tasks, including text generation, translation, sentiment analysis, and more.

Key Concepts

Some key concepts to understand when working with large language models include:

Preprocessing: The input data must be preprocessed before training a language model. This involves cleaning the text, tokenizing it into individual words or subwords, and encoding it in a format that can be fed into the model.

Fine-tuning: Large language models are often trained on large datasets, but they can also be fine-tuned on smaller, domain-specific datasets to improve their performance on specific tasks.

Generation: Language models can generate text by predicting the next word in a sequence, or by sampling from a distribution of possible words.

Translation: Language models can be used for machine translation by encoding text in one language and decoding it into another language.

Sentiment Analysis: Language models can be used for sentiment analysis by predicting the sentiment of a piece of text, such as whether it is positive, negative, or neutral.

Tools and Libraries: There are many tools and libraries available for working with large language models.
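The Generation concept above, predicting the next word or sampling from a distribution of possible words, can be sketched with a softmax over scores and a temperature parameter. In the illustration below, the four-word vocabulary and the logits are hypothetical stand-ins for what a real model would produce after a prompt.

```python
import math
import random


def softmax_with_temperature(logits, temperature=1.0):
    """Convert raw scores to probabilities; lower temperature sharpens the distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def next_token(vocab, logits, temperature=1.0, greedy=False, rng=random):
    """Pick the next token either greedily (argmax) or by sampling."""
    probs = softmax_with_temperature(logits, temperature)
    if greedy:
        return vocab[probs.index(max(probs))]
    return rng.choices(vocab, weights=probs, k=1)[0]


# Hypothetical next-word scores after the prompt "The cat sat on the"
vocab = ["mat", "sofa", "roof", "moon"]
logits = [3.0, 2.0, 1.0, 0.0]

for t in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])

print(next_token(vocab, logits, greedy=True))
print(next_token(vocab, logits, temperature=1.0, rng=random.Random(0)))
```

Greedy decoding always returns the highest-scoring word, while sampling at a higher temperature spreads probability toward less likely words and so produces more varied text.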

Quiz questions

Here is a quiz to assess your understanding of the chapter on Large Language Models (LLMs).

1. What is the primary purpose of large language models (LLMs)?

A) To understand and generate human-like language

B) To predict the next word in a sequence

C) To perform specific tasks like translation or summarization

D) All of the above

2. What are autoregressive models in the context of LLMs?

A) Models that generate fixed-size vector representations of input text

B) Models that predict the next word in a sequence given the previous words

C) Models that focus on different parts of the input sequence during computation

D) Models that use subword algorithms like BPE or WordPiece

3. What does the self-attention mechanism in transformer architecture allow the model to do?

A) Predict the next word in a sequence

B) Focus on different parts of the input sequence during computation

C) Analyze its own performance and make adjustments

D) Improve the generalization capabilities of a model by training it on diverse datasets

4. What are the key technical concepts in LLMs related to transformer architecture?

A) Self-attention

B) Pre-training objectives and strategies

C) Both A and B

D) Neither A nor B

5. Which of the following is an application of large language models?

A) Sentiment analysis

B) Question answering

C) Automatic summarization and Machine Translation

D) All of the above

6. What are the pre-training objectives and strategies in LLMs?

A) Training on a large dataset, usually unsupervised or self-supervised, before fine-tuning for a specific task

B) Training on a small dataset, usually supervised, for a specific task

C) Training on a large dataset, usually supervised, for a specific task

D) Training on a small dataset, usually unsupervised or self-supervised, before fine-tuning for a specific task

7. What is the main characteristic of autoregressive language models like GPT-3?

A) They generate fixed-size vector representations of input text

B) They predict the next word in a sequence given the previous words

C) They focus on different parts of the input sequence during computation

D) They use subword algorithms like BPE or WordPiece

8. What is the main goal of an autoencoding language model like BERT?

A) To generate fixed-size vector representations of input text

B) To predict the next word in a sequence given the previous words

C) To focus on different parts of the input sequence during computation

D) To use subword algorithms like BPE or WordPiece

9. Which LLM is an example of a combination of autoencoding and autoregressive language models?

A) Turing NLG

B) GPT series

C) BERT

D) T5

10. What does the term 'temperature' refer to in the context of LLMs?

A) A parameter that affects the randomness of the output of the softmax layer

B) A measure of the size of the model

C) A parameter that controls the length of the generated text

D) A parameter that controls the complexity of the model's predictions

11. What is the faithfulness metric in RAG evaluation used for?

A) To measure the length of the LLM output

B) To evaluate whether the LLM output factually aligns with the retrieval context

C) To assess the speed of the LLM application

D) To measure the complexity of the LLM output

12. Which of the following LLM evaluation metrics is not purely statistical and takes into account semantics and reasoning capabilities?

A) BLEU

B) QAG Score

C) GPTScore

D) Levenshtein distance

Correct Answers:

1. D

2. B

3. B

4. C

5. D

6. A

7. B

8. A

9. D

10. A

11. B

12. C