Build and Deploy LLM Application in AWS

AWS Lambda — BedRock — LangChain

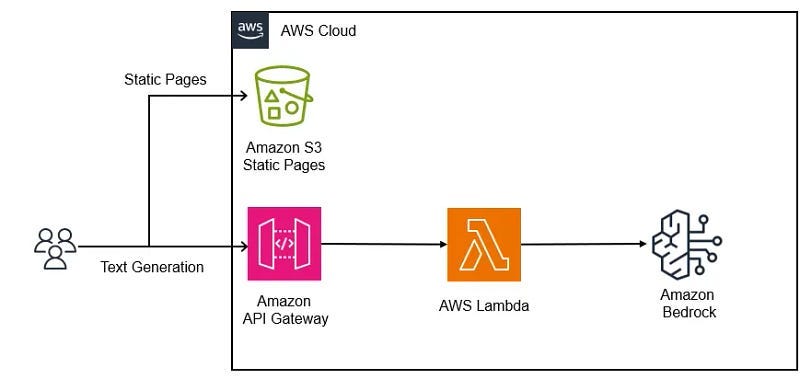

This article walks through the resources and implementation details covered in the tutorial above. We’ll explore how to build and deploy a Large Language Model (LLM) application on AWS Lambda using Amazon Bedrock and LangChain. The application lets users ask questions in natural language and returns a model-generated answer.

We collaborated with Chetan Hirapara on this tutorial. Feel free to check out his LinkedIn profile.

We covered the following topics in the tutorial:

Introduction

AWS Lambda is a serverless compute service that runs your code in response to events and automatically manages the underlying compute resources. Amazon Bedrock is a service that provides serverless access to foundation models, including LLMs, while LangChain is a framework for building applications on top of these models, for example by composing prompts and model calls into chains.

Bedrock and Lambda Service

To start, we’ll set up our AWS Lambda function to interact with Bedrock. Bedrock gives us access to a variety of LLMs through a single API, including models from providers such as AI21 Labs, Anthropic, Cohere, and Meta (whose Llama 2 model is used in this tutorial). This setup is crucial for our application, as it lets us use the text generation and analysis capabilities of these models.
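To make this interaction concrete, here is a minimal sketch of calling Bedrock directly through boto3, without LangChain. It assumes the `invoke_model` operation of the `bedrock-runtime` client and the request and response fields used by Meta Llama 2 models (`prompt`, `max_gen_len`, `generation`). The helper names `build_llama2_request` and `ask_bedrock` are illustrative and not part of the tutorial's code.

```python
import json


def build_llama2_request(prompt, max_gen_len=512):
    """Serialize a request body in the shape used by Meta Llama 2 models on Bedrock."""
    return json.dumps({"prompt": prompt, "max_gen_len": max_gen_len})


def ask_bedrock(client, prompt, model_id="meta.llama2-13b-chat-v1"):
    """Send one prompt through InvokeModel and return the generated text.

    `client` is expected to be a boto3 "bedrock-runtime" client (or a stub
    exposing the same `invoke_model` method).
    """
    response = client.invoke_model(
        modelId=model_id,
        body=build_llama2_request(prompt),
        contentType="application/json",
        accept="application/json",
    )
    # The body is a stream; Llama models return their text under "generation".
    payload = json.loads(response["body"].read())
    return payload["generation"]
```

With AWS credentials and model access in place, the call would look like `ask_bedrock(boto3.client("bedrock-runtime", region_name="us-east-1"), "[INST] What is AWS Lambda? [/INST]")`. Passing the client in as an argument keeps the helper easy to exercise with a stub before anything is deployed.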

Create Lambda Function

Next, we’ll create a Lambda function that will handle the processing of user queries.
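Before the handler passes a query to the model, it helps to be explicit about what the incoming event looks like. The sketch below is an assumption-laden helper (the name `extract_question` is hypothetical): it accepts the direct-invocation shape `{"question": "..."}` used in this tutorial and, as an added assumption, an API Gateway proxy event where the JSON payload arrives as a string under `"body"`.

```python
import json


def extract_question(event):
    """Return the user's question from a Lambda event, or None if it is absent.

    Handles a direct invocation ({"question": "..."}) and, as an assumption,
    an API Gateway proxy event whose "body" is a JSON string.
    """
    if not isinstance(event, dict):
        return None
    body = event.get("body")
    if isinstance(body, str):
        try:
            event = json.loads(body)
        except json.JSONDecodeError:
            return None
        if not isinstance(event, dict):
            return None
    question = event.get("question")
    # Treat empty or whitespace-only questions as missing.
    if isinstance(question, str) and question.strip():
        return question.strip()
    return None
```

A handler could call this first and return a 400-style response when it yields `None`, rather than sending an empty prompt to the model.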

Code and Implementation

```python
import boto3
from langchain_community.llms import Bedrock
from langchain.prompts import PromptTemplate
from langchain.chains import LLMChain

# Bedrock client setup: created once per execution environment and reused across invocations
bedrock = boto3.client(service_name="bedrock-runtime", region_name="us-east-1")


def get_llama2_llm():
    """Wrap the Llama 2 13B chat model on Bedrock as a LangChain LLM."""
    llm = Bedrock(
        model_id="meta.llama2-13b-chat-v1",
        client=bedrock,
        model_kwargs={"max_gen_len": 512},  # cap on the number of generated tokens
    )
    return llm


def get_response_llm(llm, query):
    """Fill the prompt template with the user's question and run the chain."""
    prompt_template = """<s>[INST] You are a helpful, respectful and honest assistant.
{question} [/INST] </s>
"""
    prompt = PromptTemplate(
        template=prompt_template, input_variables=["question"]
    )
    chain = LLMChain(llm=llm, prompt=prompt)
    return chain.invoke(query)


# Lambda handler
def lambda_handler(event, context):
    user_question = event.get("question")
    llm = get_llama2_llm()
    response = get_response_llm(llm, user_question)
    print("response: ", response)
    return {"body": response, "statusCode": 200}
```

Create and Add Custom Lambda Layer

To make the function's imports available at runtime, we need to add a custom Lambda layer. A layer packages additional libraries or dependencies that the Lambda function requires. Here, the layer needs to include the `langchain` library and, if the `boto3` version bundled with the runtime is too old to support the `bedrock-runtime` service, a more recent `boto3` as well.
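As a rough illustration of how such a layer can be packaged, the sketch below zips a folder of installed packages under the top-level `python/` directory that Lambda expects for Python layers. The function name `build_layer_zip` and the paths are hypothetical; it assumes the packages were already installed into a local folder, for example with `pip install langchain langchain-community --target pkgs`, ideally in an environment matching the Lambda runtime.

```python
import zipfile
from pathlib import Path


def build_layer_zip(packages_dir, zip_path):
    """Zip installed packages under the python/ prefix used by Lambda layers.

    `zip_path` should live outside `packages_dir` so the archive does not
    end up including itself.
    """
    packages_dir = Path(packages_dir)
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as archive:
        for path in sorted(packages_dir.rglob("*")):
            if path.is_file():
                # Files must sit under python/ to be importable from the layer.
                arcname = (Path("python") / path.relative_to(packages_dir)).as_posix()
                archive.write(path, arcname)
    return zip_path
```

The resulting archive can then be uploaded as a layer and attached to the function. The prebuilt layer ARNs listed in the resources are an alternative when a suitable package version is already published.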

Test

Once the Lambda function is deployed with the necessary layers, we’ll test it to ensure it’s correctly processing user queries and generating responses. This involves sending prompts to the function and verifying that the LLM generates appropriate responses.

In summary, building and deploying a serverless LLM application on AWS Lambda using Bedrock and LangChain involves setting up a Lambda function, attaching the layers it depends on, deploying it, and testing that it processes user queries and generates responses. The solution uses an LLM to answer natural language questions and can serve as a blueprint for further generative AI use cases.
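One way to script this check is to invoke the deployed function through boto3 and inspect the result. The sketch below assumes the `invoke` operation of the boto3 Lambda client and the response shape returned by the handler in this article (`statusCode` and `body`). The helper names and the function name `llm-demo` are hypothetical.

```python
import json


def ask_deployed_function(lambda_client, function_name, question):
    """Invoke the function synchronously and return its decoded JSON result."""
    response = lambda_client.invoke(
        FunctionName=function_name,
        InvocationType="RequestResponse",
        Payload=json.dumps({"question": question}).encode("utf-8"),
    )
    result = json.loads(response["Payload"].read())
    # Lambda sets FunctionError when the handler raised an exception.
    if response.get("FunctionError"):
        raise RuntimeError(f"Lambda reported an error: {result}")
    return result


def looks_like_answer(result):
    """Smoke check: a 200 status code and a non-empty body."""
    return result.get("statusCode") == 200 and bool(result.get("body"))
```

With credentials configured, `ask_deployed_function(boto3.client("lambda", region_name="us-east-1"), "llm-demo", "What is AWS Lambda?")` would send the same event shape that the console test uses. The smoke check only confirms that a response came back, so the generated text still needs a human read for quality.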

📚 Resources

- ARN for popular packages: https://api.klayers.cloud/api/v2/p3.11/layers/latest/us-east-1/html

- Amazon ECR Public Gallery — SAM: https://gallery.ecr.aws/sam/build-python3.10

- Official AWS Documentation: https://aws.amazon.com/blogs/compute/building-a-serverless-document-chat-with-aws-lambda-and-amazon-bedrock/

- Docker: https://docs.docker.com/desktop/install/mac-install/

Conclusion

This tutorial walked through building and deploying a serverless LLM application on AWS Lambda with Bedrock and LangChain. By following these steps, you’ll be able to create an application that lets users ask natural language questions, showcasing the potential of serverless computing and generative AI.

Thanks for Reading!